#1

New Member

Join Date: Mar 2013

Location: Canada

Posts: 22

Rep Power: 13

For context, for nearly two years I've been trying to figure out whether Fluent can use a GPU to speed up CFD calculations. More specifically, there has been documentation listing "officially supported" GPU cards since V14.5; what I wanted to know is whether GPUs not on that list would also work. When I first went looking for an answer I was on V14.5, and I later moved to V15. V16 has just been released, and I got to use it through a training course.

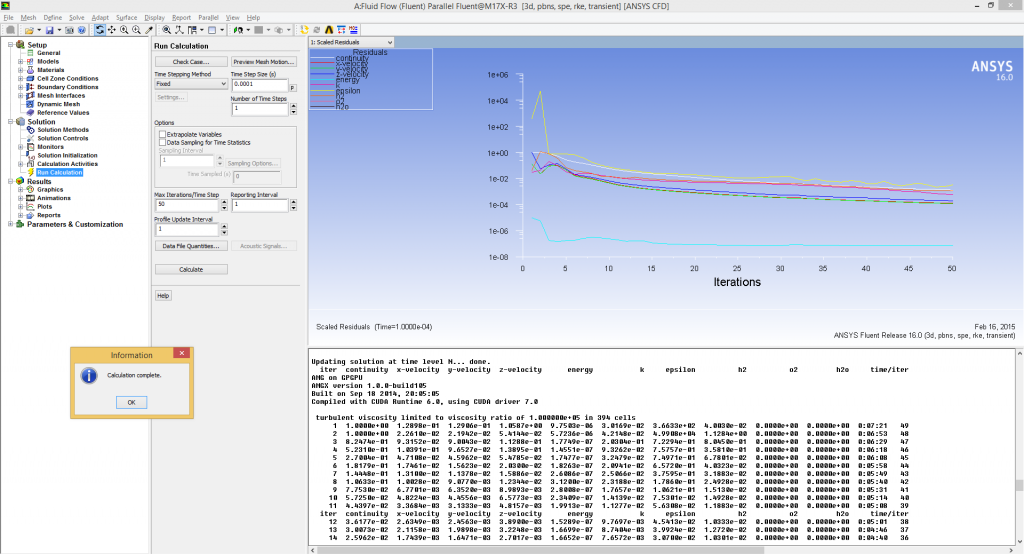

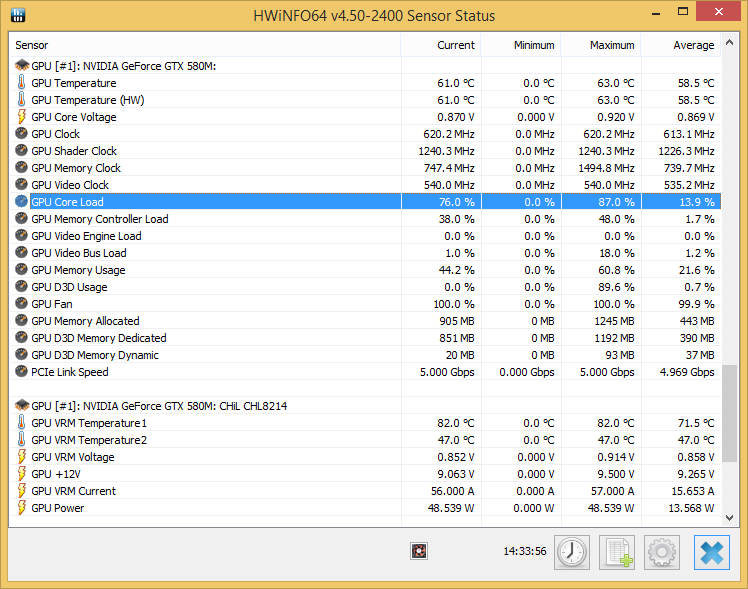

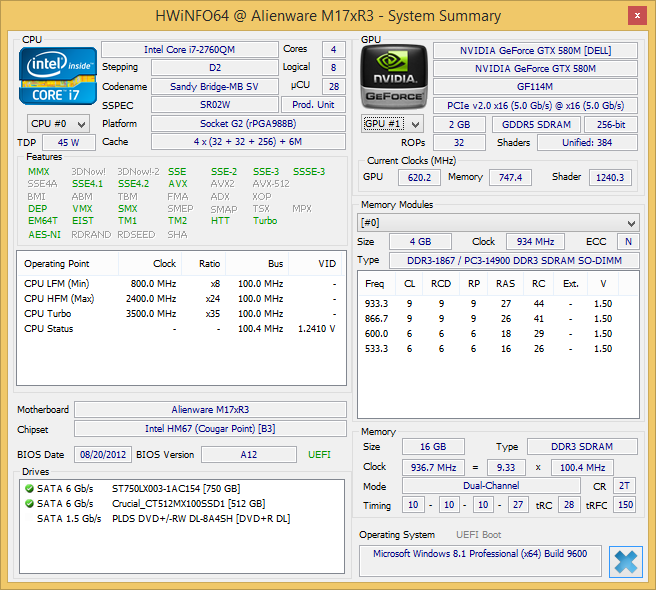

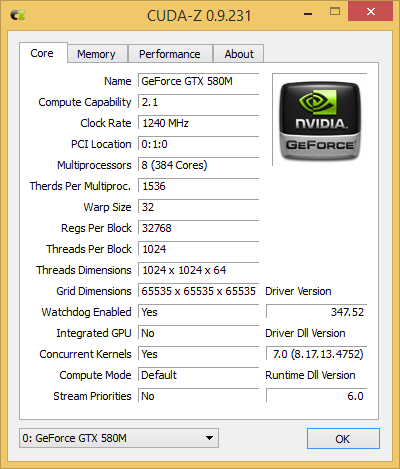

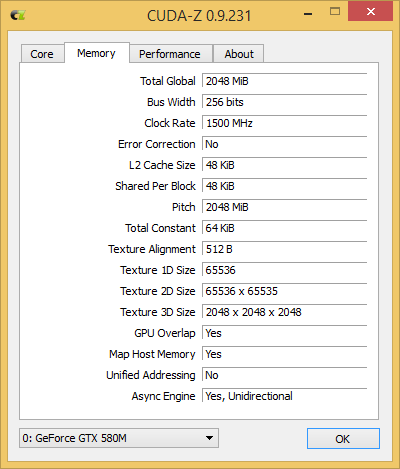

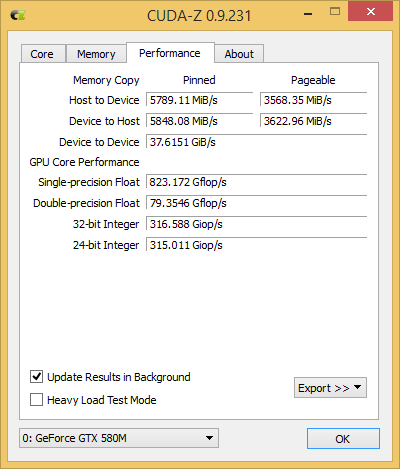

I can confirm that V16 DOES make use of my GPU, an Nvidia GTX580M (GF114M) in my Alienware M17X-R3 (Core-i7 2760QM, 16GB RAM).

For reference, here are some past threads discussing this subject:

- http://www.cfd-online.com/Forums/flu...nt-14-5-a.html
- http://www.cfd-online.com/Forums/flu...15-0-gpus.html
- http://www.cfd-online.com/Forums/flu...-gpu-mode.html
- http://www.cfd-online.com/Forums/flu...nt-14-5-a.html

The catch is that to exploit the GPU, you MUST use the pressure-based solver with Coupled pressure-velocity coupling. SIMPLE coupling will not work. I have not tried the other pressure-velocity coupling schemes, nor any of the density-based solvers.

Unfortunately, with this combination the solve times with the GPU enabled are actually HIGHER than without it in every case I investigated, which is disappointing. I suspect the culprits are the old GPU architecture and slow transfers between the CPU and GPU (my laptop uses a PCIe 2.0 x16 link, 5.0 GT/s per lane).

The results below are the timings reported by /parallel/timer/usage after running 50 iterations of a single time step of a transient internal combustion engine model with the realizable k-epsilon turbulence model. Some details of the model are listed below and can also be seen in the screenshots.

Mesh read log (every zone was read in binary):

| Count | Type | Zone |
|---|---|---|
| 990 | hexahedral cells | 23 |
| 915 | hexahedral cells | 24 |
| 291851 | tetrahedral cells | 25 |
| 297414 | tetrahedral cells | 2 |
| 10952 | mixed wall faces | 35 |
| 325 | mixed interior faces | 34 |
| 273 | mixed interior faces | 30 |
| 2 | mixed wall faces | 27 |
| 1908 | mixed wall faces | 26 |
| 20454 | mixed interior faces | 22 |
| 574422 | triangular interior faces | 42 |
| 574148 | triangular interior faces | 41 |
| 2129 | quadrilateral interior faces | 40 |
| 25564 | triangular wall faces | 39 |
| 7424 | triangular wall faces | 38 |
| 1187 | quadrilateral wall faces | 37 |
| 1182 | quadrilateral wall faces | 3 |
| 30 | quadrilateral pressure-outlet faces | 28 |
| 30 | quadrilateral pressure-outlet faces | 29 |
| 1400 | triangular velocity-inlet faces | 4 |
| 30 | quadrilateral interface faces | 31 |
| 15 | quadrilateral interface faces | 32 |
| 11120 | triangular interface faces | 33 |
| 564 | triangular interface faces | 5 |
| 13848 | triangular interface faces | 6 |
| 2349 | quadrilateral interior faces | 8 |

Interface face parents: 10952, 325, 273, 2, 1908 and 20454. Interface metric data: the same counts, in the same order, for zones 35, 34, 30, 27, 26 and 22. The mesh has 116500 nodes (and 116500 node flags). Fluent also printed "Warning: this is a single-precision solver."

The results (time for 50 iterations):

| CPU cores | Without GPU (s) | With 1 GPU (s) |
|---|---|---|
| 1 (serial) | 326.844 | n/a |
| 1 (parallel) | 338.670 | 520.312 |
| 2 | 198.150 | 436.951 |
| 4 | 144.391 | 393.609 |
| 6 | 141.466 | 403.610 |
| 8 | 338.819 | 535.495 |

I know I don't have 8 physical cores, but I was surprised that the 8-core results were so poor, especially since the best overall result came with 6 cores (my CPU has 4 physical cores, each presenting 2 logical cores through Hyper-Threading). I suspect part of the problem is that with all cores nailed at maximum utilization, the CPU has to lower its clock frequency.
Also, the partitioning is less efficient under the default settings I used:

- MPI Option Selected: pcmpi
- Selected system interconnect: default
- auto partitioning mesh by Metis (fast)

For your enlightening pleasure, the screenshots are attached above.

In the near future I will be deploying a workstation with a Haswell-E Xeon CPU and two Tesla K80 cards (not on the officially supported list). I'll report the results then. I know this doesn't help those still using V14.5 or V15, but V16 is already out and most institutions should be deploying it soon anyway. I can post a full text dump if it helps anyone.
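For anyone who wants the ratios rather than raw seconds, here is a minimal Python sketch that turns the timings reported above into speedups relative to the serial run, plus a GPU-on/GPU-off time ratio for each core count. It also sums the four cell zones from the mesh log to get the total cell count. The numbers are copied from the post; the variable and function names (`SERIAL_TIME`, `TIMINGS`, `CELL_ZONES`, `summarize`) are my own, hypothetical helpers and not anything from Fluent.

```python
# Times (s) reported by /parallel/timer/usage for 50 iterations of one time step.
SERIAL_TIME = 326.844

# Hypothetical layout: cores -> (CPU-only time, CPU + 1 GPU time), copied from the post.
TIMINGS = {
    1: (338.670, 520.312),
    2: (198.150, 436.951),
    4: (144.391, 393.609),
    6: (141.466, 403.610),
    8: (338.819, 535.495),
}

# Cell counts of the four cell zones in the mesh read log (2 hex zones, 2 tet zones).
CELL_ZONES = [990, 915, 291851, 297414]


def summarize(serial, timings):
    """Return (cores, cpu_speedup, gpu_speedup, gpu_over_cpu) tuples sorted by cores."""
    rows = []
    for cores, (cpu_only, with_gpu) in sorted(timings.items()):
        rows.append((
            cores,
            serial / cpu_only,    # speedup of the CPU-only run vs. the serial run
            serial / with_gpu,    # speedup of the GPU-enabled run vs. the serial run
            with_gpu / cpu_only,  # > 1 means enabling the GPU made the run slower
        ))
    return rows


if __name__ == "__main__":
    print(f"Total cells: {sum(CELL_ZONES)}")
    print(f"{'cores':>5} {'CPU speedup':>12} {'GPU speedup':>12} {'GPU/CPU time':>13}")
    for cores, cpu_s, gpu_s, ratio in summarize(SERIAL_TIME, TIMINGS):
        print(f"{cores:>5} {cpu_s:>12.2f} {gpu_s:>12.2f} {ratio:>13.2f}")
```

Every GPU/CPU time ratio comes out above 1 (roughly 1.5 to 2.9), i.e. enabling the GPU was slower at every core count tested, and the best CPU-only speedup over serial is about 2.3 at 6 cores.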

#2

New Member

Richard Gibbons

Join Date: Apr 2015

Posts: 2

Rep Power: 0

Thank you very much for the info!

If you can, you should run a similar test using a card with good double-precision performance.

#3

New Member

Dustin

Join Date: Sep 2011

Location: Earth

Posts: 23

Rep Power: 14

I do appreciate your test!

#4

New Member

Join Date: Mar 2013

Location: Canada

Posts: 22

Rep Power: 13

Quote:

#5

New Member

QunfengZou

Join Date: Dec 2017

Posts: 1

Rep Power: 0

So, what is going wrong?

Similar Threads

| Thread | Thread Starter | Forum | Replies | Last Post |
|---|---|---|---|---|
| Two questions on Fluent UDF | Steven | Fluent UDF and Scheme Programming | 7 | March 23, 2018 03:22 |
| heat transfer with RANS wall function, over a flat plate (validation with fluent) | bruce | OpenFOAM Running, Solving & CFD | 6 | January 20, 2017 06:22 |
| Error using Fluent 15 and GPU card | David Christopher | FLUENT | 1 | June 26, 2014 18:35 |
| How to enable GPU computing using Fluent 14.5? | epc1 | FLUENT | 1 | January 4, 2013 13:19 |
| Problems in lauching FLUENT | Lourival | FLUENT | 3 | January 16, 2008 16:48 |