Jacobi method

From CFD-Wiki

(fixed dot product notation) |

|||

| (8 intermediate revisions not shown) | |||

| Line 1: | Line 1: | ||

| + | The Jacobi method is an algorithm in linear algebra for determining the solutions of a system of linear equations with largest absolute values in each row and column dominated by the diagonal element. Each diagonal element is solved for, and an approximate value plugged in. The process is then iterated until it converges. This algorithm is a stripped-down version of the Jacobi transformation method of matrix diagonalization. The method is named after German mathematician [http://en.wikipedia.org/wiki/Carl_Gustav_Jakob_Jacobi Carl Gustav Jakob Jacobi]. | ||

| + | |||

We seek the solution to set of linear equations: <br> | We seek the solution to set of linear equations: <br> | ||

| - | :<math> A \ | + | :<math> A \phi = b </math> <br> |

| - | |||

In matrix terms, the definition of the Jacobi method can be expressed as : <br> | In matrix terms, the definition of the Jacobi method can be expressed as : <br> | ||

| - | <math> | + | :<math> |

| - | + | \phi^{(k+1)} = D^{ - 1} \left[\left( {L + U} \right)\phi^{(k)} + b\right] | |

</math><br> | </math><br> | ||

| - | |||

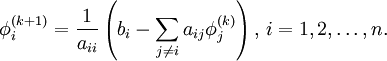

| - | == | + | where <math>D</math>, <math>L</math>, and <math>U</math> represent the diagonal, lower triangular, and upper triangular parts of the coefficient matrix <math>A</math> and <math>k</math> is the iteration count. This matrix expression is mainly of academic interest, and is not used to program the method. Rather, an element-based approach is used: |

| - | ---- | + | |

| - | + | :<math> | |

| - | : for k := 1 step 1 | + | \phi^{(k+1)}_i = \frac{1}{a_{ii}} \left(b_i -\sum_{j\ne i}a_{ij}\phi^{(k)}_j\right),\, i=1,2,\ldots,n. |

| + | </math> | ||

| + | |||

| + | Note that the computation of <math>\phi^{(k+1)}_i</math> requires each element in <math>\phi^{(k)}</math> except itself. Then, unlike in the [[Gauss-Seidel method]], we can't overwrite <math>\phi^{(k)}_i</math> with <math>\phi^{(k+1)}_i</math>, as that value will be needed by the rest of the computation. This difference between the Jacobi and Gauss-Seidel methods complicates matters somewhat. Generally, two vectors of size <math>n</math> will be needed, and a vector-to-vector copy will be required. If the form of <math>A</math> is known (e.g. tridiagonal), then the additional storage should be avoidable with careful coding. | ||

| + | |||

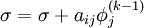

| + | == Algorithm == | ||

| + | Choose an initial guess <math>\phi^{0}</math> to the solution <br> | ||

| + | : for k := 1 step 1 until convergence do <br> | ||

:: for i := 1 step until n do <br> | :: for i := 1 step until n do <br> | ||

::: <math> \sigma = 0 </math> <br> | ::: <math> \sigma = 0 </math> <br> | ||

::: for j := 1 step until n do <br> | ::: for j := 1 step until n do <br> | ||

:::: if j != i then | :::: if j != i then | ||

| - | ::::: <math> \sigma = \sigma + a_{ij} | + | ::::: <math> \sigma = \sigma + a_{ij} \phi_j^{(k-1)} </math> |

:::: end if | :::: end if | ||

::: end (j-loop) <br> | ::: end (j-loop) <br> | ||

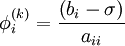

| - | ::: <math> | + | ::: <math> \phi_i^{(k)} = {{\left( {b_i - \sigma } \right)} \over {a_{ii} }} </math> |

:: end (i-loop) | :: end (i-loop) | ||

:: check if convergence is reached | :: check if convergence is reached | ||

: end (k-loop) | : end (k-loop) | ||

| - | |||

| - | + | ==Convergence== | |

| + | The method will always converge if the matrix A is strictly or irreducibly diagonally dominant. Strict row diagonal dominance means that for each row, the absolute value of the diagonal term is greater than the sum of absolute values of other terms: | ||

| + | |||

| + | :<math>\left | a_{ii} \right | > \sum_{i \ne j} {\left | a_{ij} \right |} </math> | ||

| + | |||

| + | The Jacobi method sometimes converges even if this condition is not satisfied. It is necessary, however, that the diagonal terms in the matrix are greater (in magnitude) than the other terms. | ||

| + | |||

| + | == Example Calculation == | ||

| + | As with [[Gauss-Seidel method|Gauss-Seidel]], Jacobi iteration lends itself to situations in which we need not explicitly represent the matrix. Consider the simple heat equation problem | ||

| + | |||

| + | :<math>\nabla^2 T(x) = 0,\ x\in [0,1]</math> | ||

| + | |||

| + | subject to the boundary conditions <math>T(0)=0</math> and <math>T(1)=1</math>. The exact solution to this problem is <math>T(x)=x</math>. The standard second-order finite difference discretization is | ||

| + | |||

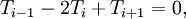

| + | :<math> T_{i-1}-2T_i+T_{i+1} = 0,</math> | ||

| + | |||

| + | where <math>T_i</math> is the (discrete) solution available at uniformly spaced nodes (see [[Gauss-Seidel method#Example Calculation|the Gauss-Seidel example]] for the matrix form). For any given <math>T_i</math> for <math>1 < i < n</math>, we can write | ||

| + | |||

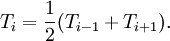

| + | :<math> T_i = \frac{1}{2}(T_{i-1}+T_{i+1}).</math> | ||

| + | |||

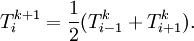

| + | Then, stepping through the solution vector from <math>i=2</math> to <math>i=n-1</math>, we can compute the next iterate from the two surrounding values. For a proper Jacobi iteration, we'll need to use values from the previous iteration on the right-hand side: | ||

| + | |||

| + | :<math> T_i^{k+1} = \frac{1}{2}(T_{i-1}^{k}+T_{i+1}^k).</math> | ||

| + | |||

| + | The following table gives the results of 10 iterations with 5 nodes (3 interior and 2 boundary) as well as <math>L_2</math> norm error. | ||

| + | {| align=center border=1 | ||

| + | |+ Jacobi Solution | ||

| + | ! Iteration !! <math>T_1</math> !! <math>T_2</math> !! <math>T_3</math> !! <math>T_4</math> !! <math>T_5</math> !! <math>L_2</math> error | ||

| + | |- | ||

| + | | 0 | ||

| + | | 0.0000E+00 | ||

| + | | 0.0000E+00 | ||

| + | | 0.0000E+00 | ||

| + | | 0.0000E+00 | ||

| + | | 1.0000E+00 | ||

| + | | 1.0000E+00 | ||

| + | |- | ||

| + | | 1 | ||

| + | | 0.0000E+00 | ||

| + | | 0.0000E+00 | ||

| + | | 0.0000E+00 | ||

| + | | 5.0000E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 6.1237E-01 | ||

| + | |- | ||

| + | | 2 | ||

| + | | 0.0000E+00 | ||

| + | | 0.0000E+00 | ||

| + | | 2.5000E-01 | ||

| + | | 5.0000E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 4.3301E-01 | ||

| + | |- | ||

| + | | 3 | ||

| + | | 0.0000E+00 | ||

| + | | 1.2500E-01 | ||

| + | | 2.5000E-01 | ||

| + | | 6.2500E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 3.0619E-01 | ||

| + | |- | ||

| + | | 4 | ||

| + | | 0.0000E+00 | ||

| + | | 1.2500E-01 | ||

| + | | 3.7500E-01 | ||

| + | | 6.2500E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 2.1651E-01 | ||

| + | |- | ||

| + | | 5 | ||

| + | | 0.0000E+00 | ||

| + | | 1.8750E-01 | ||

| + | | 3.7500E-01 | ||

| + | | 6.8750E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 1.5309E-01 | ||

| + | |- | ||

| + | | 6 | ||

| + | | 0.0000E+00 | ||

| + | | 1.8750E-01 | ||

| + | | 4.3750E-01 | ||

| + | | 6.8750E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 1.0825E-01 | ||

| + | |- | ||

| + | | 7 | ||

| + | | 0.0000E+00 | ||

| + | | 2.1875E-01 | ||

| + | | 4.3750E-01 | ||

| + | | 7.1875E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 7.6547E-02 | ||

| + | |- | ||

| + | | 8 | ||

| + | | 0.0000E+00 | ||

| + | | 2.1875E-01 | ||

| + | | 4.6875E-01 | ||

| + | | 7.1875E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 5.4127E-02 | ||

| + | |- | ||

| + | | 9 | ||

| + | | 0.0000E+00 | ||

| + | | 2.3438E-01 | ||

| + | | 4.6875E-01 | ||

| + | | 7.3438E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 3.8273E-02 | ||

| + | |- | ||

| + | | 10 | ||

| + | | 0.0000E+00 | ||

| + | | 2.3438E-01 | ||

| + | | 4.8438E-01 | ||

| + | | 7.3438E-01 | ||

| + | | 1.0000E+00 | ||

| + | | 2.7063E-02 | ||

| + | |} | ||

| - | -- | + | ==External links== |

| - | + | *[http://www.math-linux.com/spip.php?article49 Jacobi method from www.math-linux.com] | |

| + | *[http://mathworld.wolfram.com/JacobiMethod.html Jacobi Method at Math World] | ||

| + | *[http://en.wikipedia.org/wiki/Jacobi_method Jacobi method at Wikipedia] | ||

Latest revision as of 09:15, 3 January 2012

The Jacobi method is an algorithm in linear algebra for determining the solutions of a system of linear equations with largest absolute values in each row and column dominated by the diagonal element. Each diagonal element is solved for, and an approximate value plugged in. The process is then iterated until it converges. This algorithm is a stripped-down version of the Jacobi transformation method of matrix diagonalization. The method is named after German mathematician Carl Gustav Jakob Jacobi.

We seek the solution to set of linear equations:

In matrix terms, the definition of the Jacobi method can be expressed as :

where  ,

,  , and

, and  represent the diagonal, lower triangular, and upper triangular parts of the coefficient matrix

represent the diagonal, lower triangular, and upper triangular parts of the coefficient matrix  and

and  is the iteration count. This matrix expression is mainly of academic interest, and is not used to program the method. Rather, an element-based approach is used:

is the iteration count. This matrix expression is mainly of academic interest, and is not used to program the method. Rather, an element-based approach is used:

Note that the computation of  requires each element in

requires each element in  except itself. Then, unlike in the Gauss-Seidel method, we can't overwrite

except itself. Then, unlike in the Gauss-Seidel method, we can't overwrite  with

with  , as that value will be needed by the rest of the computation. This difference between the Jacobi and Gauss-Seidel methods complicates matters somewhat. Generally, two vectors of size

, as that value will be needed by the rest of the computation. This difference between the Jacobi and Gauss-Seidel methods complicates matters somewhat. Generally, two vectors of size  will be needed, and a vector-to-vector copy will be required. If the form of

will be needed, and a vector-to-vector copy will be required. If the form of  is known (e.g. tridiagonal), then the additional storage should be avoidable with careful coding.

is known (e.g. tridiagonal), then the additional storage should be avoidable with careful coding.

Contents |

Algorithm

Choose an initial guess  to the solution

to the solution

- for k := 1 step 1 until convergence do

- for i := 1 step until n do

-

- for j := 1 step until n do

- if j != i then

-

- end if

- if j != i then

- end (j-loop)

-

-

- end (i-loop)

- check if convergence is reached

- for i := 1 step until n do

- end (k-loop)

Convergence

The method will always converge if the matrix A is strictly or irreducibly diagonally dominant. Strict row diagonal dominance means that for each row, the absolute value of the diagonal term is greater than the sum of absolute values of other terms:

The Jacobi method sometimes converges even if this condition is not satisfied. It is necessary, however, that the diagonal terms in the matrix are greater (in magnitude) than the other terms.

Example Calculation

As with Gauss-Seidel, Jacobi iteration lends itself to situations in which we need not explicitly represent the matrix. Consider the simple heat equation problem

subject to the boundary conditions  and

and  . The exact solution to this problem is

. The exact solution to this problem is  . The standard second-order finite difference discretization is

. The standard second-order finite difference discretization is

where  is the (discrete) solution available at uniformly spaced nodes (see the Gauss-Seidel example for the matrix form). For any given

is the (discrete) solution available at uniformly spaced nodes (see the Gauss-Seidel example for the matrix form). For any given  for

for  , we can write

, we can write

Then, stepping through the solution vector from  to

to  , we can compute the next iterate from the two surrounding values. For a proper Jacobi iteration, we'll need to use values from the previous iteration on the right-hand side:

, we can compute the next iterate from the two surrounding values. For a proper Jacobi iteration, we'll need to use values from the previous iteration on the right-hand side:

The following table gives the results of 10 iterations with 5 nodes (3 interior and 2 boundary) as well as  norm error.

norm error.

| Iteration |  |  |  |  |  |  error error

|

|---|---|---|---|---|---|---|

| 0 | 0.0000E+00 | 0.0000E+00 | 0.0000E+00 | 0.0000E+00 | 1.0000E+00 | 1.0000E+00 |

| 1 | 0.0000E+00 | 0.0000E+00 | 0.0000E+00 | 5.0000E-01 | 1.0000E+00 | 6.1237E-01 |

| 2 | 0.0000E+00 | 0.0000E+00 | 2.5000E-01 | 5.0000E-01 | 1.0000E+00 | 4.3301E-01 |

| 3 | 0.0000E+00 | 1.2500E-01 | 2.5000E-01 | 6.2500E-01 | 1.0000E+00 | 3.0619E-01 |

| 4 | 0.0000E+00 | 1.2500E-01 | 3.7500E-01 | 6.2500E-01 | 1.0000E+00 | 2.1651E-01 |

| 5 | 0.0000E+00 | 1.8750E-01 | 3.7500E-01 | 6.8750E-01 | 1.0000E+00 | 1.5309E-01 |

| 6 | 0.0000E+00 | 1.8750E-01 | 4.3750E-01 | 6.8750E-01 | 1.0000E+00 | 1.0825E-01 |

| 7 | 0.0000E+00 | 2.1875E-01 | 4.3750E-01 | 7.1875E-01 | 1.0000E+00 | 7.6547E-02 |

| 8 | 0.0000E+00 | 2.1875E-01 | 4.6875E-01 | 7.1875E-01 | 1.0000E+00 | 5.4127E-02 |

| 9 | 0.0000E+00 | 2.3438E-01 | 4.6875E-01 | 7.3438E-01 | 1.0000E+00 | 3.8273E-02 |

| 10 | 0.0000E+00 | 2.3438E-01 | 4.8438E-01 | 7.3438E-01 | 1.0000E+00 | 2.7063E-02 |

![\phi^{(k+1)} = D^{ - 1} \left[\left( {L + U} \right)\phi^{(k)} + b\right]](/W/images/math/2/5/1/251a2f655111e185ad6346ba43ad3117.png)

![\nabla^2 T(x) = 0,\ x\in [0,1]](/W/images/math/6/8/1/681af3c6083368314f689c744b5b198c.png)