|

|

|

[Sponsors] | |||||

|

|

|

#241 |

|

Senior Member

Will Kernkamp

Join Date: Jun 2014

Posts: 315

Rep Power: 12  |

Geekbench 4 is better. It includes memory bandwidth testing. (The Epyc has almost twice the score on bandwidth.) Did not find results for the newest processors. Therefore a comparison between the previous generations Epyc/Threadripper:

Processor 7401P 2970WX Single-Core Score 3619 4509 Multi-Core Score 58498 55139 Single-Core Bandwith Score 7401P 2970WX 4378 2701 23.4 GB/sec 14.4 GB/sec Multi-Core Bandwith Score 7401P 2970WX 8745 4577 46.7 GB/sec 24.4 GB/sec |

|

|

|

|

|

|

|

|

#242 | |

|

New Member

anonymous

Join Date: Oct 2019

Posts: 4

Rep Power: 6  |

Quote:

The following results with the 2X EPYC 7302 were obtained with NPS4 set in the bios: # cores Wall time (s): ------------------------ 8 79.41 16 41.55 32 26.53 SLC, Unfortunately I don't have access to Fluent. |

||

|

|

|

||

|

|

|

#243 |

|

New Member

Clément

Join Date: Dec 2019

Location: France

Posts: 6

Rep Power: 6  |

Hello,

I have a question that is at a very hard level : (If there is a need to add shemas or diagrams to illustrate my interrogation and assumption to make it clearer I can add some, because I have some in my computers) What i can notice all along this thread, as a personal conclusion, is that to ensure the best performances of cfd calculation on a cluster it is better to in a way or another having the number of cores of CPUs equal to the total number of memory channels. In this way I am sure there will be absolutely no bottleneck in the performances in having a hardware ratio of "1 CPU core / 1 memory channel". Indeed the results of this benchmark show that almost 100% of the dual socket epyc tests that have been made in this thread result to : Speedup of the calculation = number of cpu cores used (when number of cores = or < number of memory channels) And Speedup of the calculation starts to become < number of cpu cores used (when number of cores > number of memory channels) Right ? :-). And so that the speedup results of allmost all the dual socket epyc tests were about at least 16 when 16 cores were used. And even a speedup about more than 32 with 32 cores of calculation with the 2x2 epyc connected with infiniband test of havref ... And this is because all epyc processors have 8 memory channels each. (even if I must admit that I do not understand why in reality your results often even respectively exceed the value of 16 and 32 for current dual epyc tests and for the 2x2 epyc tests of havref, but this is an other story, and I don't want to add difficulties to the current reasoning problem ...) But we also have seen that when number of cores becomes > number of memory channels, then a stall suddenly appears in the speedup results and a small decrease of the speedup value starts becoming bigger and bigger all along the number of cpu cores increases... But I have a question as the version of PCI-E 3.0 is going to change through the PCI-E 4.0 : Is that going to change in some way that general rule of performance that I am describing to you in this post ? As the bandwidth of the new bus is going to be multiplied by 2, does it means that the new rule of performance with PCI-E 4.0 will become : Speedup of the calculation = number of cpu cores used (when number of cores = or < 2 x number of memory channels) Because of a new bandwidth twice larger than the previous one with PCI-E 4.0. To some extent my question could be : is the RAM bandwidth will also be multiplied by 2 all along the path of the datas between the cpu and the ram (all along the path...) ? And is this bandwidth increase can be to some extent a substitution to the half of the "channel memory" well known problem ... I know that this is a really hard question what I am asking. So I don't want to force anyone to respond to it. Thank you all Maphteach Last edited by Maphteach; December 21, 2019 at 12:22. |

|

|

|

|

|

|

|

|

#244 | |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,399

Rep Power: 46   |

Quote:

The integrated memory controller acts as an additional layer, and handles memory access for all cores associated to it. Or more precisely: for all memory attached to it. The reason for the slowdown is much simpler: with more cores processing the calculations faster, thus more data per unit of time has to be fed to the CPU cores. The memory bandwidth becomes the bottleneck. On top of that, memory latency increases with higher load on the memory subsystem. As for the PCIe topic: this has nothing to do with system memory. PCIe is used to connect stuff like graphics cards or NVMe SSDs. Integrated memory controllers, as the name suggests, are integrated into the CPU itself. They do not communicate with CPU cores via PCIe. New versions of the PCIe standard will have no influence on system memory bandwidth or latency. |

||

|

|

|

||

|

|

|

#245 | ||

|

New Member

Clément

Join Date: Dec 2019

Location: France

Posts: 6

Rep Power: 6  |

Thank you for your fast response flotus1, I will read it carefully in order to understand well ...

Maphteach 1st update: Quote:

Anyway this is what I conclude when I see the trends of the results of your tests and benchmark and I find they encouraging in the way that we can understand what increase the performance of the speedup thanks to they. And I note that the Epyc CPUs communicate each other really faster than the intel CPUs and I think this is really noteworthy ... Because communication between CPUs and nodes is a really important parameter of the cfd parallel speed calculation. Quote:

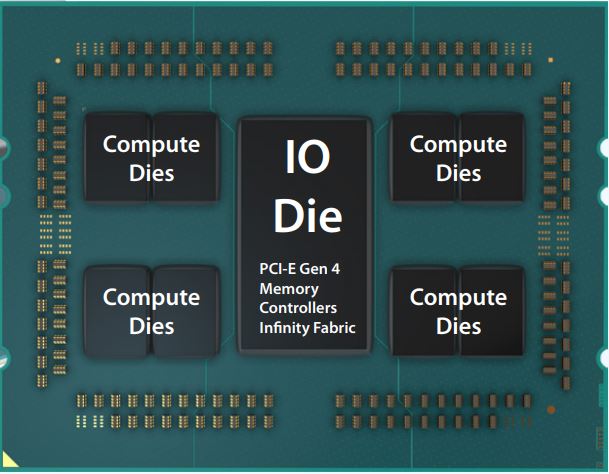

A few days ago I was learning about this subject, and I learnt that the Front-side bus (FSB) that was in the old days connecting the CPU with the memory controller doesn't exist anymore nowadays because the memory controller is now integrated inside the CPU. So I really agree with you on this point. However I wouldn't say that the PCI-E is a kind of an intruder relatively speaking to the field of the cfd performance calculation, because I have heard that this is half the number of lines of PCI-E that belongs to each CPU which determines the speed of communication between several CPUs on a motherboard. 64 PCI-E lines for each Epyc CPUs against something that seems to be lower concerning the communication between several Intel CPUs on a motherboard ... Explaining probably the difference of calculation performance between the two brands of CPU. (for the 64 lines I found those informations on the wikipedia Epyc web page https://en.wikipedia.org/wiki/Epyc) Furthermore, even knowing that memory controllers are now integrated inside the CPUs, and that the FSB disappeared a long time ago. Yesterday I was looking for informations on that subject and I found architectures schemes of the CPU Epyc 7002 generation that suggest that the PCI-E is playing an important role inside the CPU architecture even if right now I don't exactly know what kind of role it is playing. (scheme : https://www.microway.com/hpc-tech-ti...me-chipletdie/)  And seriously speaking if the PCI-E plays an important role inside the CPU, so why not in the RAM operations ? Because today, memory controllers are integrated inside the CPU architecture. 2nd update: I have found more informations about Epyc CPUs architecture, schemes that are describing memory channel and PCI-E more like 2 different lines connecting very close to each other but with a different and perpendicular direction for each one. They are more like crossing each other but not overlaying. So I think they are not sharing a bandwidth And so that respond to my question. Consequently we can say that the PCI-e 4.0 doesn't solve the RAM Channel problem. But this was a difficult question to know the reel differences between PCI-e and RAM channel as for example the person who wrote the wikipedia AMD Epyc page made the response to this question a little bit ambiguous in writting "eight DDR4 SDRAM and PCIe 4.0 channels" to describe the AMD CPU in its article, like if PCI-e was also the channels ... But it's not the case. Thank you Maphteach Last edited by Maphteach; January 7, 2020 at 12:40. |

|||

|

|

|

|||

|

|

|

#246 |

|

New Member

Clément

Join Date: Dec 2019

Location: France

Posts: 6

Rep Power: 6  |

Hello,

Havref, is it possible to know which version of infiniband you have used to realize your Epyc dual servers test and getting such high results !? I mean which one of these versions, to know the bandwidth of your connection : 10 Gbit/s (SDR, Single Data Rate), 20 Gbit/s (DDR, Double Data Rate), 40 Gbit/s (QDR, Quad Data Rate), 56 Gbit/s (FDR, Fourteen Data Rate), 100 Gbit/s (EDR, Eighteen DataRate) or 200 Gbit/s (HDR, High Data Rate) ? Thank you Maphteach

__________________

If you want to learn more about myself, and about what I am working on : https://www.maphteach-cfd-simulations.fr/ |

|

|

|

|

|

|

|

|

#247 | |

|

New Member

Erik

Join Date: Jul 2019

Posts: 7

Rep Power: 6  |

Quote:

Minimum Infiniband FDR 40 Gbits would be fine to get good results as i ve seen the comparison between 1 Gbit, 10 Gbit and Infiniband FDR 40 Gbit at https://www.hpcadvisorycouncil.com/p...l_2680_FCA.pdf So its all about lowing latencies between the network connections, and a good infiniband network connection can handle it to achive remarkable results. But scaling would be best for using more than just 2 nodes. For the purpose of building a dualnode cluster 1 Gbit is ok. |

||

|

|

|

||

|

|

|

#248 |

|

New Member

Clément

Join Date: Dec 2019

Location: France

Posts: 6

Rep Power: 6  |

Thank you erik87.

That's an interesting post and response. Maphteach

__________________

If you want to learn more about myself, and about what I am working on : https://www.maphteach-cfd-simulations.fr/ |

|

|

|

|

|

|

|

|

#249 | |

|

New Member

Clément

Join Date: Dec 2019

Location: France

Posts: 6

Rep Power: 6  |

Does anyone has tried with more than 40 Gbits/s for the inifiniband bandwidth ?

Quote:

I think the best solution would be to have an inifiniband bandwidth equal to the bus connecting two Epyc CPUs on a motherboard. That's maybe possible because HDR supports up to 200Gbits/s. But a very expensive solution I know it ... A HDR card is at something like 1000 usd. That means 1000 usd for each node. A very high price ! Thanks Maphteach

__________________

If you want to learn more about myself, and about what I am working on : https://www.maphteach-cfd-simulations.fr/ Last edited by Maphteach; January 13, 2020 at 20:57. Reason: quoting havref |

||

|

|

|

||

|

|

|

#250 | |

|

New Member

Clément

Join Date: Dec 2019

Location: France

Posts: 6

Rep Power: 6  |

Quote:

Is raptorcs.com your website ? What I am looking for are the LaGrange/Monza packages witth more than 4 channel memory ! Please say me where I can't find this kind of quality products for high level computation ? Thank you Maphteach

__________________

If you want to learn more about myself, and about what I am working on : https://www.maphteach-cfd-simulations.fr/ |

||

|

|

|

||

|

|

|

#251 |

|

New Member

Matthew Sparkman

Join Date: Jan 2020

Posts: 1

Rep Power: 0  |

OS- Ubuntu 16.04

OpenFOAM 4 CPU- Auto RAM- AUTO MODE (UMA) 3200CL16 # cores Wall time (s): ------------------------ 1 800.82 2 440.71 4 240.89 6 198.61 8 185.58 CPU- Auto RAM- CHANNEL MODE (NUMA) 3200CL16 # cores Wall time (s): ------------------------ 1 773.59 2 446.37 4 230.31 6 181.91 8 158.45 CPU- Auto RAM- CHANNEL MODE (NUMA) 3400CL16 # cores Wall time (s): ------------------------ 1 746.15 2 427.8 4 206.95 6 182.88 8 150.72 CPU- 4.2 ALL CORE RAM- CHANNEL MODE (NUMA) 3400CL16 # cores Wall time (s): ------------------------ 1 738.21 2 421.37 4 204.84 6 176.33 8 150.08 Analysis- A 9.6% all core overclock yielded 0% gains. UMA to NUMA topology change yielded a 14.5% advantage. A 5.9% RAM overclock yielded a 4.9% advantage. Conclusion- Gen 1 Threadripper is bandwidth starved in CFD applications. I have a 1920X on the way and I will confirm that Threadripper hits a wall at 12 cores. Edit- SMT was OFF for all runs. |

|

|

|

|

|

|

|

|

#252 |

|

New Member

Solal Amouyal

Join Date: Sep 2019

Posts: 3

Rep Power: 6  |

Hi everyone,

Here are the performance results for 2x Intel Xeon 6240 (18 cores @ 2.6 GHz), RAM 32GB x 12 DIMM DDR4 Synchronous 2933 MHz running on CentOS Linux release 7.7.1908 (Core). The following results are for 1 node with 2 CPUs. I'll soon try with multiple nodes to test scaling - Infiniband is used so I expect good results for that as well. # cores Wall time (s) Speedup ---------------------------------- 1 819.96 - 2 457.78 1.79 4 200.44 4.09 6 135.14 6.07 8 102.61 7.99 12 72.34 11.33 16 58.81 13.94 18 54.46 15.06 20 51.13 16.04 24 46.85 17.05 28 43.78 18.72 32 41.62 19.70 36 40.74 20.13 Near-perfect results up to 12-16 cores, then scaling drops. From reading the early posts, I assume it's related to the memory channels available. There is however one issue, I am getting the following error after the iterations are done, and after the writing of solution files: [9] [9] [9] --> FOAM FATAL ERROR: [9] Attempt to return primitive entry ITstream : IOstream.functions.streamLines.seedSampleSet, line 0, IOstream: Version 2.0, format ascii, line 0, OPENED, GOOD primitiveEntry 'seedSampleSet' comprises on line 0: word 'uniform' as a sub-dictionary [9] [9] From function virtual const Foam::dictionary& Foam:  rimitiveEntry::dict() const rimitiveEntry::dict() const[9] in file db/dictionary/primitiveEntry/primitiveEntry.C at line 184. [9] Any idea how to resolve it? These errors are messing with my profiling

|

|

|

|

|

|

|

|

|

#253 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,399

Rep Power: 46   |

Probably related to streamlines. Here are the workarounds, unfortunately these were never included in the original post.

OpenFOAM benchmarks on various hardware |

|

|

|

|

|

|

|

|

#254 | |

|

New Member

Solal Amouyal

Join Date: Sep 2019

Posts: 3

Rep Power: 6  |

Quote:

Thanks for the fast answer. That actually solved it and I was able to run IPM to get an estimation of the MPI time. As expected, communication is relatively low with MPI taking about 15% of the total wall time when using 36 cores. Therefore the lack of scaling when using more than 12 cores is most likely memory bound. |

||

|

|

|

||

|

|

|

#255 |

|

New Member

Jan

Join Date: Apr 2018

Location: Czechia

Posts: 3

Rep Power: 8  |

Hi,

i run the benchmark on 2x xeon 2687w v2 (3.4GHz) (edited) - 8x8GB 2Rx4 DDR3-1600 PC3-12800R ECC vlp - with OF 7 - Elementary os 5.1.2 - kernel 5.3.0-40-generic HyperThreading: off NUMA setting: on The run itself: # cores Wall time (s): ------------------------ 1 901.43 2 485.12 4 219.16 6 157.99 8 132.82 12 110.53 16 102.02 Meshing: 1 25m43,839s 2 17m15,793s 4 9m54,322s 6 6m55,330s 8 5m46,486s 12 5m46,486s 16 4m37,098s The scaling for 16 is not the best ~8.8. I used the two scripts from flotus1 and they took around a second from the run on all cores, so thank you for the tip. Last edited by Han_sel; February 23, 2020 at 04:47. Reason: Can’t remember specs - base frequency is 3.4GHz thank you @Simbelmynë |

|

|

|

|

|

|

|

|

#256 |

|

Senior Member

Join Date: May 2012

Posts: 546

Rep Power: 15  |

@Han_sel

Those numbers seem really good, especially considering you only use 1600 MHz memory modules. The highest Ivy Bridge results (in this thread) are around 1 iteration/s and with 1866 MHz memory, so your setup is definitely doing something good! You write 3.5 GHz, since the official base frequency is 3.4 GHz, does it mean that you have done some light overclock (fsb overclock perhaps)? Since the all-core turbo-boost is 3.6 GHz this is a bit puzzling though. Anyhow, seems to work great. |

|

|

|

|

|

|

|

|

#257 | |

|

New Member

Jan

Join Date: Apr 2018

Location: Czechia

Posts: 3

Rep Power: 8  |

Quote:

I have looked at others results and now I wonder as well why it performs |

||

|

|

|

||

|

|

|

#258 |

|

Super Moderator

Alex

Join Date: Jun 2012

Location: Germany

Posts: 3,399

Rep Power: 46   |

I think the results are well within expectations. Here are my results with the predecessor, Xeon E5-2687W"v1" OpenFOAM benchmarks on various hardware

Performance-wise, many of the benchmark numbers posted here are on the lower end of the spectrum. Running benchmarks is hard, especially if you want consistently good results. |

|

|

|

|

|

|

|

|

#259 | |

|

New Member

Jan

Join Date: Apr 2018

Location: Czechia

Posts: 3

Rep Power: 8  |

Quote:

|

||

|

|

|

||

|

|

|

#260 |

|

New Member

Kurt Stuart

Join Date: Feb 2020

Location: Southern illinois

Posts: 19

Rep Power: 6  |

Dell R820 4x E5-4640 2.6ghz 16x4gb PC312800 This was my first run, I'm hoping with some work it can go better. This cost me $520 shipped.

# cores Wall time (s): ------------------------ 1 1137.55 2 619.25 4 264.8 6 187.7 8 142.35 10 125.07 12 105.16 14 96.76 16 85.26 18 85.96 20 77.75 22 78.91 24 71.71 26 75.19 28 69.97 32 72.9 |

|

|

|

|

|

|

|

|

Similar Threads

Similar Threads

|

||||

| Thread | Thread Starter | Forum | Replies | Last Post |

| How to contribute to the community of OpenFOAM users and to the OpenFOAM technology | wyldckat | OpenFOAM | 17 | November 10, 2017 15:54 |

| UNIGE February 13th-17th - 2107. OpenFOAM advaced training days | joegi.geo | OpenFOAM Announcements from Other Sources | 0 | October 1, 2016 19:20 |

| OpenFOAM Training Beijing 22-26 Aug 2016 | cfd.direct | OpenFOAM Announcements from Other Sources | 0 | May 3, 2016 04:57 |

| New OpenFOAM Forum Structure | jola | OpenFOAM | 2 | October 19, 2011 06:55 |

| Hardware for OpenFOAM LES | LijieNPIC | Hardware | 0 | November 8, 2010 09:54 |