#1 Sergey (New Member, joined Jan 2018, 18 posts)

In my work I use 4 servers:

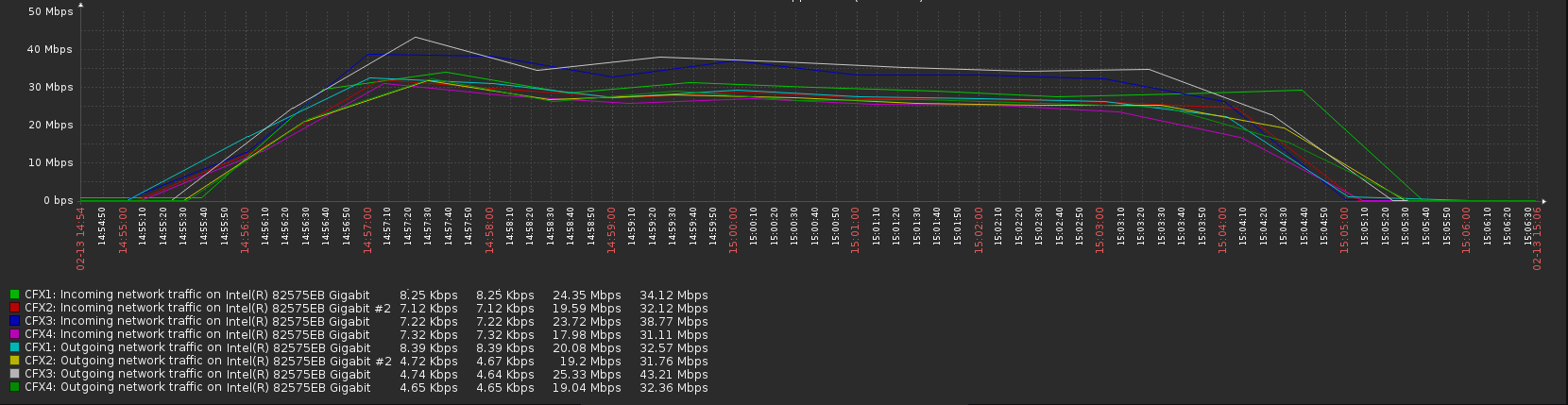

Each server has:

- 2 × Intel Xeon X5660 @ 2.8 GHz (6 cores each, 12 cores in total)
- 64 GB DDR3-1333 memory
- 1 TB SATA SSD

I am running a sewer pump calculation with 100 iterations in ANSYS CFX (Platform MPI, Distributed Parallel). The maximum network load during the calculation is 45 Mbit/s. My question is: why doesn't my calculation use the full Ethernet capacity? At the same time, every specialist I asked recommended InfiniBand. Am I doing something wrong? How can I increase my cluster's performance?


#2 Senior Member (Austin, TX; joined Mar 2009, 160 posts)

With just 4 nodes, especially ones this old, I am not surprised to see network utilization that low.

Also keep in mind that not every process is using the network all the time. Network traffic only occurs when data has to be exchanged across the partition boundaries between machines. I wouldn't bother with InfiniBand for your setup.
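To put the boundary argument in rough numbers, here is a minimal sketch under an idealized assumption: an n × n × n structured mesh cut into one slab per machine. CFX partitions unstructured meshes differently, so `internode_interface_fraction` is a hypothetical helper that only illustrates the trend: the share of cells that sit on an inter-node interface (and therefore generate network traffic) shrinks as the mesh grows.

```python
def internode_interface_fraction(n: int, nodes: int) -> float:
    """Fraction of cells that lie next to an inter-node interface.

    Idealized model (an assumption, not how CFX actually partitions):
    an n x n x n structured mesh is cut into `nodes` equal slabs along one
    axis, one slab per machine. Each of the (nodes - 1) cuts has one layer
    of n * n cells on each side whose values must cross the network.
    """
    total_cells = n ** 3
    interface_cells = 2 * (nodes - 1) * n * n  # one layer on each side of each cut
    return interface_cells / total_cells


for n in (50, 100, 200):
    frac = internode_interface_fraction(n, nodes=4)
    print(f"{n**3:>9,} cells on 4 nodes: {frac:.1%} of cells are on an inter-node interface")
```

In this model the fraction is 2(nodes - 1)/n, so doubling the mesh resolution in each direction halves the relative amount of inter-node traffic.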


#3 M-G (New Member, joined Apr 2016, 28 posts)

It's not about bandwidth.

It's about latency. Gigabit Ethernet latency is about 100 times higher than InfiniBand's, and high latency can severely degrade parallel performance in CFD problems.
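The latency argument can be illustrated with the simple latency-bandwidth (Hockney) model, t(m) = α + m/β, where α is the per-message latency and β the link bandwidth. The parameter values below are assumed round numbers, not measurements: 50 µs and 1 Gbit/s for Gigabit Ethernet, 1 µs and about 3 GB/s for InfiniBand (a 50x latency gap, more conservative than the figure quoted above). The sketch shows why a latency-bound exchange of small messages leaves most of the Ethernet bandwidth unused while still dominating the communication time.

```python
# Hockney (latency-bandwidth) model: sending one m-byte message takes
# t(m) = alpha + m / beta. All values are assumed round numbers for
# illustration, not measurements of any particular hardware.
LINKS = {
    "Gigabit Ethernet": {"alpha": 50e-6, "beta": 125e6},  # 50 us, 1 Gbit/s
    "InfiniBand": {"alpha": 1e-6, "beta": 3e9},           # 1 us, ~3 GB/s
}


def message_time(m_bytes: float, alpha: float, beta: float) -> float:
    """Seconds needed to transfer one m-byte message."""
    return alpha + m_bytes / beta


def effective_mbit_per_s(m_bytes: float, alpha: float, beta: float) -> float:
    """Throughput achieved when sending back-to-back m-byte messages."""
    return m_bytes * 8 / message_time(m_bytes, alpha, beta) / 1e6


for m in (1_000, 10_000, 1_000_000):
    for name, params in LINKS.items():
        t = message_time(m, **params)
        rate = effective_mbit_per_s(m, **params)
        print(f"{m:>9,} B over {name:<16}: {t * 1e6:8.1f} us, {rate:8.1f} Mbit/s")
```

For 1 kB messages the Ethernet link in this model spends most of each transfer waiting on latency and reaches only a small fraction of its nominal 1 Gbit/s, which is consistent with low observed bandwidth utilization.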


#4 Alex (Super Moderator, Germany; joined Jun 2012, 3,398 posts)

This. And in addition to that:

Not fully utilizing the interconnect bandwidth does not indicate a bottleneck there. With only 4 relatively slow nodes, Ethernet can deliver performance similar to InfiniBand. The bottleneck is the performance of the compute nodes. You can run a node scaling analysis to verify that everything is fine, i.e., compare the run time of a sufficiently large case when distributed on 1, 2, 3 and 4 nodes. Or, more rigorously: instead of assuming that the case is sufficiently large, measure weak scaling.
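A minimal sketch of the node scaling analysis described above. `strong_scaling` uses the usual definitions for a fixed case, speedup S(n) = T(1)/T(n) and efficiency E(n) = S(n)/n; `weak_scaling` assumes the case size is grown in proportion to the node count, so the ideal is a constant wall time and E_w(n) = T(1)/T(n). Both function names are hypothetical, and the timings in the usage lines are placeholders to be replaced with your own measured wall times.

```python
from __future__ import annotations


def strong_scaling(wall_times: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Fixed case size, wall time per node count (must include 1 node).

    Returns {n: (speedup, efficiency)}.
    """
    t1 = wall_times[1]
    result = {}
    for n, t in sorted(wall_times.items()):
        speedup = t1 / t
        result[n] = (speedup, speedup / n)
    return result


def weak_scaling(wall_times: dict[int, float]) -> dict[int, float]:
    """Case size grows with n (e.g. n times the cells on n nodes).

    Ideal weak scaling keeps the wall time constant, so efficiency is T(1)/T(n).
    """
    t1 = wall_times[1]
    return {n: t1 / t for n, t in sorted(wall_times.items())}


# Placeholder wall times in seconds (hypothetical; replace with measurements).
strong_times = {1: 1000.0, 2: 520.0, 3: 360.0, 4: 280.0}
weak_times = {1: 1000.0, 2: 1050.0, 3: 1100.0, 4: 1180.0}

for n, (s, e) in strong_scaling(strong_times).items():
    print(f"strong, {n} node(s): speedup {s:.2f}, efficiency {e:.0%}")
for n, e in weak_scaling(weak_times).items():
    print(f"weak,   {n} node(s): efficiency {e:.0%}")
```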


#5 Qin Zhang (New Member, joined May 2012, 10 posts)


I have encountered a similar issue. I also set up a four-node cluster with 24 cores per node, i.e., 96 cores in total. I tried a large case distributed on 1, 2, 3 and 4 nodes. The results are confusing: there is not much difference in wall time between 24 cores and 48 cores, but with 96 cores the wall time is reduced by half. I also tried different mesh sizes and found a similar trend. Does that mean I should move to IB? Or upgrade to a 10G interconnect? The latter would be much cheaper and more affordable than IB. Looking forward to your advice. Many thanks, Qin


#6 Alex (Super Moderator)

First things first: if you observe zero scaling from 1->2 nodes, but then 100% scaling going from 2->4 nodes, there is probably something wrong with your benchmark. This deserves a closer look before drawing expensive conclusions.
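One way to spot the kind of inconsistency described here is to compute the efficiency of each step between consecutive node counts, (T(a)/T(b)) / (b/a). In a typical strong-scaling run this step efficiency stays at or below 1 and tends to fall as nodes are added; a step that is much better than the one before it deserves a closer look (superlinear steps can be genuine, e.g. from cache effects, but they should be explained). `step_efficiencies`, `suspicious_steps` and the tolerance `tol` are a hypothetical heuristic, not a standard test, and the relative times below only approximate the pattern described in post #5; they are not measured data.

```python
from __future__ import annotations


def step_efficiencies(wall_times: dict[int, float]) -> list[tuple[int, int, float]]:
    """Efficiency of each step a -> b between consecutive node counts.

    Defined as (T(a) / T(b)) / (b / a); 1.0 means the extra nodes paid off fully.
    """
    counts = sorted(wall_times)
    steps = []
    for a, b in zip(counts, counts[1:]):
        speedup = wall_times[a] / wall_times[b]
        steps.append((a, b, speedup / (b / a)))
    return steps


def suspicious_steps(
    wall_times: dict[int, float], tol: float = 0.15
) -> list[tuple[int, int, float]]:
    """Heuristic: flag steps that are clearly superlinear, or that are much
    more efficient than the preceding step."""
    flagged = []
    prev = None
    for a, b, eff in step_efficiencies(wall_times):
        if eff > 1.0 + tol or (prev is not None and eff > prev + tol):
            flagged.append((a, b, eff))
        prev = eff
    return flagged


# Relative wall times approximating post #5 (hypothetical numbers):
# 2 nodes no faster than 1 node, 4 nodes twice as fast as 2 nodes.
times = {1: 1.0, 2: 1.0, 4: 0.5}
for a, b, eff in step_efficiencies(times):
    print(f"{a} -> {b} nodes: step efficiency {eff:.2f}")
print("suspicious:", suspicious_steps(times))
```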

The cost of InfiniBand depends on one thing only: whether you can buy used hardware. Used InfiniBand gear is pretty cheap on eBay, due to rather large supply and low demand. If you are deciding between 10G Ethernet and InfiniBand with brand-new hardware, get InfiniBand. 10G Ethernet is not cheap either, and chances are it will not improve scaling much in cases where gigabit Ethernet does not cut it.


#7 Qin Zhang (New Member)


Thank you for your reply. The network load is 40-50 Mbit/s with two nodes and 10-20 Mbit/s with four nodes. Does that suggest that four nodes give better load balancing than two, and that this is why 100% scaling is achieved? I bind processes to cores when I run mpirun. Are there any other mpirun options I should add to optimize scaling? Many thanks, Qin
